Transformers: From Foundational Research To Real World Applications

AI and the Transformer: A Powerful Duo Revolutionizing Natural Language Processing

Artificial intelligence (AI) has made significant strides in recent years, and deep learning has been a key driver of this progress.

One of the most exciting advancements in deep learning is the transformer architecture, which has revolutionized the field of natural language processing (NLP).

What is Deep Learning?

Deep learning is a branch of artificial intelligence that trains multilayer artificial neural networks. These layers, loosely inspired by the organization of the human brain, learn to recognize intricate patterns in data.

Deep learning models have demonstrated impressive performance across a range of tasks, such as natural language processing, speech recognition, and image recognition.

What is a Transformer?

A transformer is a neural network architecture that has taken center stage in NLP. Unlike conventional recurrent neural networks (RNNs), which process data sequentially, transformers can examine every position of an input sequence at once. This enables them to capture long-range dependencies between words, which is essential for understanding sentence meaning.
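The contrast between the two processing styles can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the sizes, the random weights `W_x` and `W_h`, and the toy input `x` are all made up for the example and do not come from any real model.

```python
import numpy as np

rng = np.random.default_rng(0)
seq_len, d_model = 5, 4
x = rng.normal(size=(seq_len, d_model))    # one vector per token (toy data)

# RNN-style: each hidden state depends on the previous one, so the
# time steps have to be computed one after another.
W_x = rng.normal(size=(d_model, d_model)) * 0.1   # hypothetical weights
W_h = rng.normal(size=(d_model, d_model)) * 0.1   # hypothetical weights
h = np.zeros(d_model)
rnn_states = []
for t in range(seq_len):
    h = np.tanh(x[t] @ W_x + h @ W_h)
    rnn_states.append(h)
rnn_states = np.stack(rnn_states)          # shape (seq_len, d_model)

# Transformer-style: similarities between every pair of positions come
# out of a single matrix product, with no step-by-step dependency.
pairwise = x @ x.T                         # shape (seq_len, seq_len)
```

The loop cannot start step `t` before step `t - 1` has finished, whereas the matrix product has no such ordering constraint, which is what makes it easy to parallelize.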

How Transformers Work

Transformers use a technique called attention, which enables the model to concentrate on the portions of the input sequence that are most pertinent to the task at hand.

This is comparable to how people pay attention to particular details in a discussion in order to decipher the meaning.

Transformers are composed of two primary components:

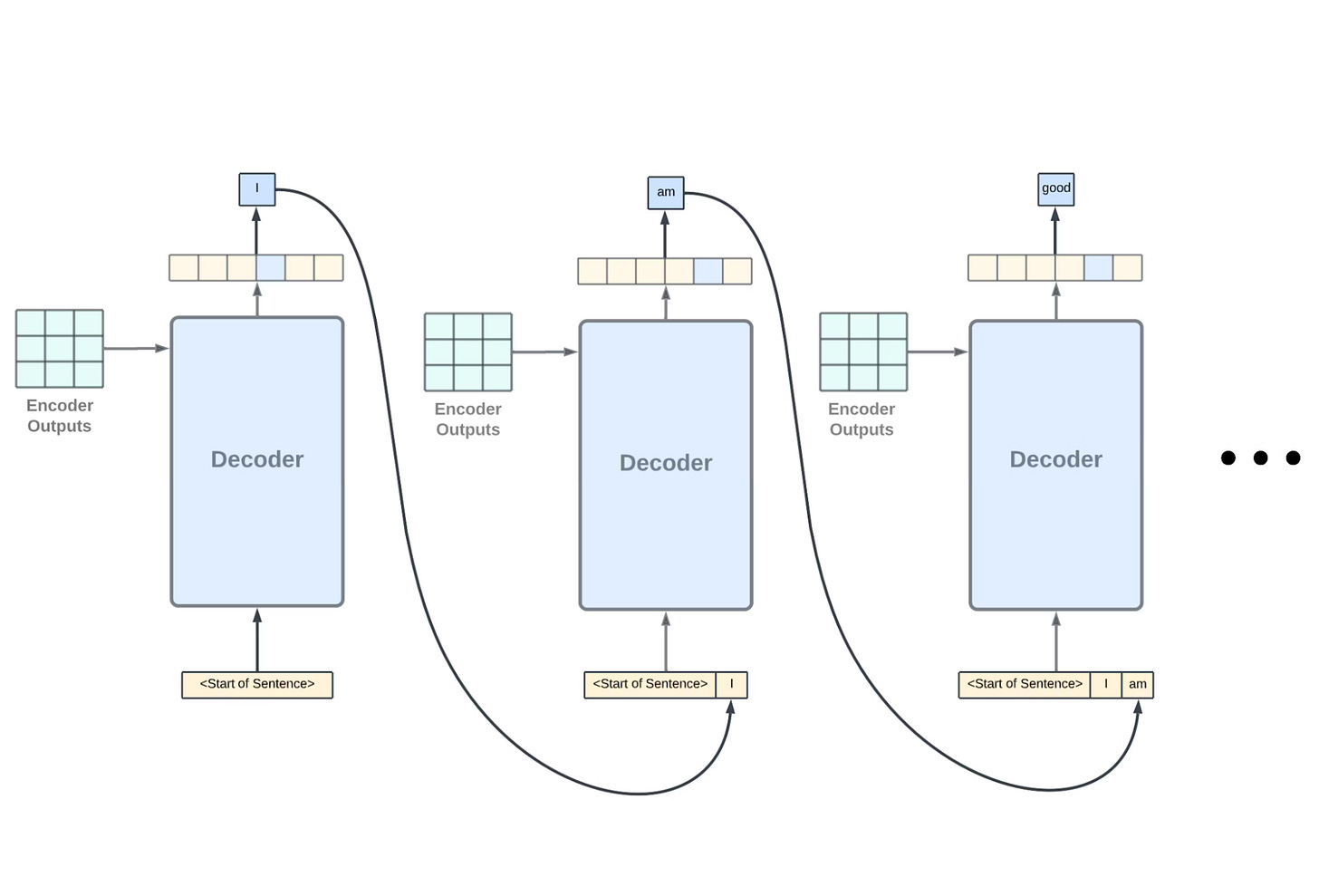

Encoder: The encoder takes the input sequence and processes it to generate a representation of the entire sequence.

Decoder: The decoder uses the encoder's output and generates the desired output sequence, such as a translation in machine translation or a response in a chatbot conversation.

Going Deeper: Understanding the Transformer Architecture

The attention mechanism is the central idea of transformers. Transformers can process every element of the input sequence at once, in contrast to conventional RNNs, which process data one step at a time. Imagine reading a sentence.

A transformer can evaluate the entire sentence at once, recognizing how the words relate to one another, whereas an RNN would work through it one word at a time.

This is achieved through two key components:

Query, Key, and Value Vectors:

Each word in the input sequence is represented by three vectors:

Query Vector: This represents what the current word is looking for, the "question" that directs attention.

Key Vector: This represents what each word in the sequence offers for matching; queries are compared against keys.

Value Vector: This holds the information each word contributes to the output, weighted by how well its key matches the query.

Attention Scores:

The transformer calculates an attention score for each pair of words in the sequence.

This score indicates how relevant a particular word (represented by its key vector) is to the current query (represented by the query vector).

By focusing on words with high attention scores, the transformer can effectively capture long-range dependencies between words, even if they are far apart in the sequence.

This is crucial for tasks like machine translation, where understanding the relationship between words across the entire sentence is essential for producing an accurate translation.
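As a rough illustration of the query, key, and value vectors and the pairwise scores described above, here is a minimal NumPy sketch. The projection matrices `W_q`, `W_k`, and `W_v` are hypothetical and filled with random numbers; in a trained model they would be learned, and the sizes here are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8
embeddings = rng.normal(size=(seq_len, d_model))   # one row per word (toy data)

# Hypothetical projection matrices (random here, learned in a real model).
W_q = rng.normal(size=(d_model, d_k))
W_k = rng.normal(size=(d_model, d_k))
W_v = rng.normal(size=(d_model, d_k))

queries = embeddings @ W_q   # what each word is looking for
keys = embeddings @ W_k      # what each word offers for matching
values = embeddings @ W_v    # the information each word passes along

# scores[i, j] measures how relevant word j's key is to word i's query.
scores = queries @ keys.T    # shape (seq_len, seq_len)
```

Because `scores` has one entry for every pair of words, a word at the start of the sequence can be scored against a word at the end just as directly as against its neighbor.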

Exploring Specific Applications of Transformers

We've briefly touched on applications like machine translation and chatbots. Here's a deeper look at some specific transformer-powered tools:

GPT-3 (Generative Pre-trained Transformer 3):

GPT-3 is a large language model from OpenAI that can generate realistic and creative text, translate between languages, and answer questions. It's a great illustration of how transformers are applied to large-scale language generation.

BERT (Bidirectional Encoder Representations from Transformers)

BERT is a pre-trained transformer model created by Google AI that is very good at figuring out the connections between words in a text. It is employed in a number of NLP tasks, such as sentiment analysis and question answering.

XLNet

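This transformer model, developed by researchers at Carnegie Mellon University and Google, addresses some limitations of BERT. Rather than masking words as BERT does, it is trained autoregressively over many different orderings (permutations) of the words in a sentence.

This means the model still considers the context of both the previous and following words in a sentence, while avoiding the artificial mask tokens used in BERT's training.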

Getting Started with Transformers: Resources and Tools

If you're interested in learning more about transformers or even experimenting with them yourself, here are some helpful resources:

Hugging Face Transformers Library: This open-source library provides pre-trained transformer models and tools for developers to fine-tune and use them for various NLP tasks.

Google AI Blog: Google AI publishes informative blog posts about transformers and other advancements in deep learning.

Online Courses: Several online platforms offer courses and tutorials on transformers, catering to both beginners and experienced programmers.

Transformers Beyond the Hype: Niche Applications

While machine translation and text summarization are widely known uses of transformers, here are some lesser-known but impactful applications:

Drug Discovery: Transformers are being used to sift through large volumes of scientific literature to find new uses for existing medications or to uncover prospective drug targets. Their capacity to capture intricate relationships described in the literature may speed up drug discovery.

Code Generation: Transformer models trained on code repositories can generate code, sometimes approaching what a human developer would write. By automating tedious tasks and helping developers write code more efficiently, this could substantially change software development.

Music Composition: Researchers are exploring transformers for music generation. By analyzing existing music pieces, transformers can create new musical compositions with different styles and genres.

Theoretical Foundations of Transformers: A Peek Under the Hood

Understanding the core concepts behind transformers can provide a deeper appreciation of their capabilities. Here's a glimpse into some key theoretical aspects:

Self-Attention vs. Encoder-Decoder Attention:

Transformers rely mainly on a self-attention mechanism, in which every element in the input sequence attends to every other element of that same sequence.

This contrasts with encoder-decoder attention (also called cross-attention), used in tasks such as machine translation, in which the queries come from the decoder while the keys and values come from the encoder's output. This lets each target-language position being generated attend to the whole source-language sentence.
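The difference comes down to where the queries, keys, and values originate. In self-attention all three come from the same sequence; in encoder-decoder attention the queries come from the decoder side and the keys and values from the encoder side. The sketch below assumes a plain scaled dot-product `attention` helper and leaves out the learned projections for brevity; the `source` and `target` arrays are random stand-ins for encoder and decoder states.

```python
import numpy as np

def attention(query, key, value):
    """Scaled dot-product attention for 2-D arrays (rows are positions)."""
    scores = query @ key.T / np.sqrt(key.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)   # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ value

rng = np.random.default_rng(0)
source = rng.normal(size=(6, 8))   # stand-in encoder states: 6 source tokens
target = rng.normal(size=(3, 8))   # stand-in decoder states: 3 target tokens

# Self-attention: queries, keys, and values all come from one sequence.
self_out = attention(source, source, source)    # shape (6, 8)

# Encoder-decoder attention: queries from the decoder, keys/values from the encoder.
cross_out = attention(target, source, source)   # shape (3, 8)
```

Note that the output always has one row per query, so the cross-attention result has as many rows as there are target positions.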

Understanding Positional Encoding:

Because transformers process every position of the sequence at once, they lack the built-in sense of word order that RNNs get from reading sequentially. Positional encoding is a technique used to address this.

It adds information about each word's position in the sequence to that word's representation. Different positional encoding strategies exist, and researchers continue to investigate more efficient ones.
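One well-known strategy is the fixed sinusoidal encoding from the original transformer paper. The sketch below is a minimal NumPy version of that scheme; the function name is made up for this example, and it assumes `d_model` is even.

```python
import numpy as np

def sinusoidal_positional_encoding(seq_len, d_model):
    """Return a (seq_len, d_model) array of sinusoidal position codes.

    Assumes d_model is even: even columns hold sines, odd columns cosines.
    """
    positions = np.arange(seq_len)[:, None]      # shape (seq_len, 1)
    dims = np.arange(0, d_model, 2)[None, :]     # even dimension indices 0, 2, 4, ...
    angles = positions / np.power(10000.0, dims / d_model)
    encoding = np.zeros((seq_len, d_model))
    encoding[:, 0::2] = np.sin(angles)
    encoding[:, 1::2] = np.cos(angles)
    return encoding

# Typical use: add the codes to the word embeddings before the first layer.
embeddings = np.zeros((10, 16))                  # placeholder embeddings
inputs = embeddings + sinusoidal_positional_encoding(10, 16)
```

Each position receives a distinct pattern of values, so two identical words at different positions no longer look the same to the model.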

Multi-Head Attention: A Powerful Extension

The transformer can simultaneously learn numerous attention patterns thanks to multi-head attention, which is an extension of the core attention process.

Consider having several "heads" that each concentrate on a certain facet of the connections between words. This enables the model to take into account several possible links between words, capturing a fuller grasp of the input sequence.
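A minimal NumPy sketch of the idea follows. It assumes the model dimension divides evenly by the number of heads, and the weight matrices `W_q`, `W_k`, `W_v`, and `W_o` are hypothetical random stand-ins for learned parameters.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)        # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def multi_head_attention(x, W_q, W_k, W_v, W_o, num_heads):
    """Self-attention with several heads; assumes d_model % num_heads == 0."""
    seq_len, d_model = x.shape
    d_head = d_model // num_heads

    def split_heads(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ W_q), split_heads(x @ W_k), split_heads(x @ W_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)   # (heads, seq, seq)
    weights = softmax(scores)                             # one pattern per head
    heads = weights @ v                                   # (heads, seq, d_head)
    # Concatenate the heads back into (seq_len, d_model) and mix them.
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ W_o, weights

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 8))                               # toy input sequence
W_q, W_k, W_v, W_o = (rng.normal(size=(8, 8)) for _ in range(4))
output, weights = multi_head_attention(x, W_q, W_k, W_v, W_o, num_heads=2)
```

Here `weights` holds a separate attention pattern for each head, which is what lets different heads specialize in different relationships between words.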

These theoretical ideas serve as the foundation for transformers, enabling them to carry out challenging tasks and produce cutting-edge outcomes in a range of NLP applications.

Transformers: Pushing the Boundaries of Research

Researchers are constantly exploring new frontiers with transformers. Here are some intriguing areas of investigation:

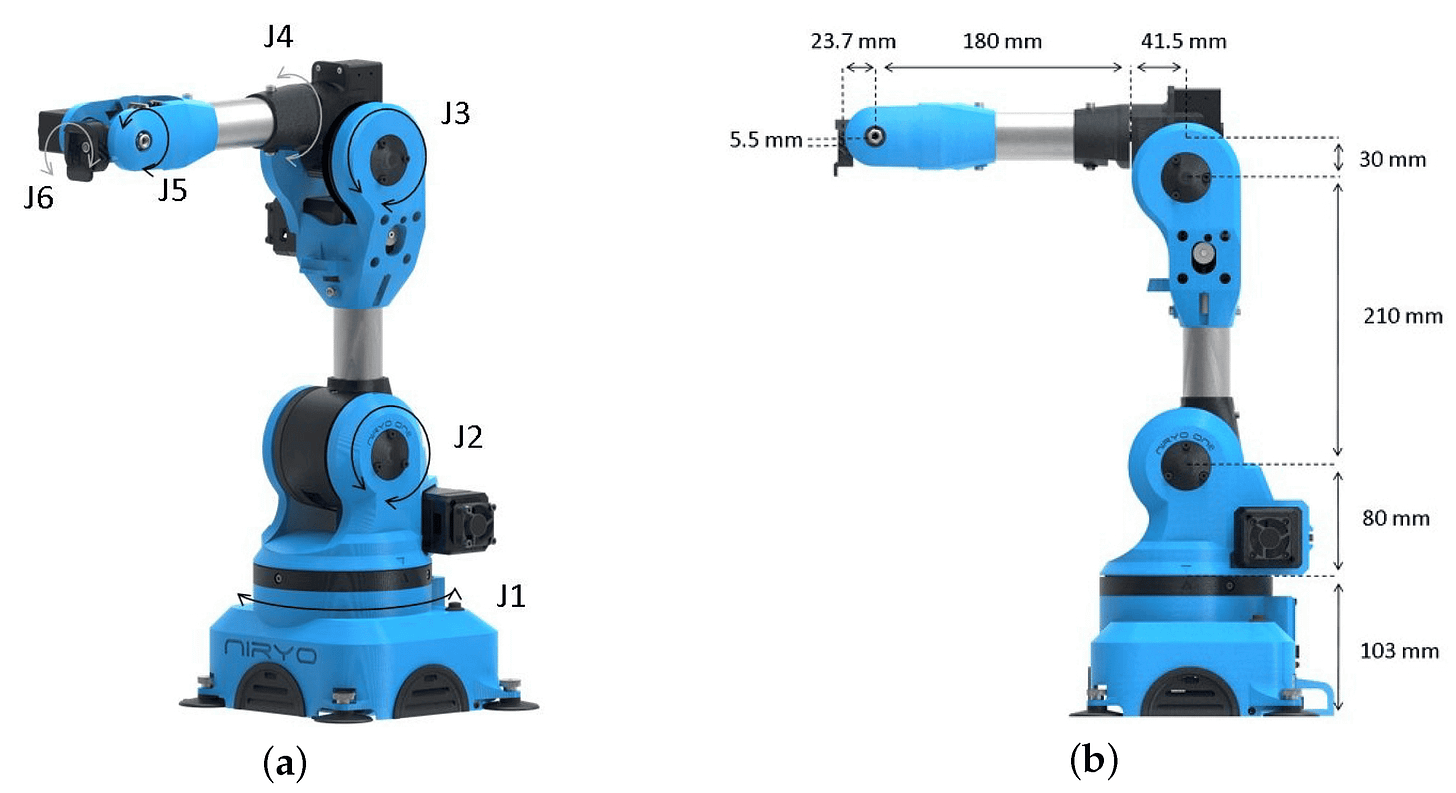

Transformers for Robotics: Can transformers be used to enable robots to better understand and respond to natural language instructions? This could revolutionize human-robot interaction.

Transformer-based Reasoning: Can transformers be incorporated into AI systems to enable more sophisticated reasoning capabilities? This could lead to the development of AI systems that can solve problems and make decisions in complex scenarios.

Explainable Transformers: Transformers can behave like black boxes. Research on making them more interpretable is crucial for building trust and understanding how these models arrive at their outputs.

Attention is Everything: A Deep Dive into Attention Mechanisms

The core of a transformer's power lies in its attention mechanism. It's not magic, but it's pretty darn close! Here's a more technical breakdown:

Attention Scores: As described earlier, transformers assign an attention score to each pair of words in the sequence. Each score represents the relevance of one word (through its key vector) to the current focus (the query vector).

Softmax Function: A softmax function is then applied to the attention scores, turning them into weights that behave like probabilities. This guarantees that the attention weights for each query add up to 1, dividing the model's attention among the pertinent parts of the sequence.

Scaled Dot-Product Attention: This is the technical name for the core attention operation. It takes the dot product of the query with each key vector and divides the result by the square root of the key dimension. Without this scaling, large dot products push the softmax into regions where gradients become extremely small (a training problem).
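Put together, these three steps are usually written as a single formula. The notation below follows the common convention from the original transformer paper rather than anything defined in this article: Q, K, and V are matrices whose rows are the query, key, and value vectors, and d_k is the dimension of the key vectors.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```

The product of Q with the transpose of K gives the attention scores, the division by the square root of d_k is the scaling, the softmax is applied to each row, and the final multiplication by V forms the weighted sum of value vectors.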

Transformers in Action: Code Examples (get ready for some pseudocode!)

Seeing transformers in action can solidify your understanding. Here's a simplified example (using pseudocode) of a single attention layer:
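A minimal sketch of such a layer is given below, written as runnable NumPy rather than strict pseudocode. The names `query`, `key`, and `value` follow the surrounding text; the use of NumPy, the function name `attention`, and the scaling by the square root of the key dimension are assumptions made for this illustration.

```python
import numpy as np

def attention(query, key, value):
    # Compare every query with every key, then scale by sqrt(key dimension).
    scores = query @ key.T / np.sqrt(key.shape[-1])
    # Softmax over each row turns the scores into weights that sum to 1.
    scores = scores - scores.max(axis=-1, keepdims=True)   # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    # Each output row is a weighted sum of the value vectors.
    return weights @ value
```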

In this example, query, key, and value represent vectors for each word in the sequence. The @ symbol denotes matrix multiplication, and T denotes the transpose operation.

This code snippet essentially shows how the attention mechanism calculates weights for each value vector based on its relevance to the current query and returns a weighted sum.

The Hype is Real: But It's Just the Beginning

Transformers have revolutionized NLP, but they're still young. Here are some exciting areas where transformer research is exploding:

Transformers for Video Understanding: Is it possible to train transformers to watch videos, analyze them, and provide captions or synopses? This might completely change how people search for and retrieve videos.

Creativity and Generative AI: Transformers are used to create a variety of artistic text formats, including scripts, poems, code, and musical compositions. New avenues for artistic expression and human-AI cooperation may result from this.

Transformers for Biology: We saw earlier that transformers are being applied to drug discovery. Can they also be used to forecast the effects of genetic changes on organisms or to study the structures of proteins? This has the potential to advance both biological research and personalized medicine.

Transformers for Creative Exploration:

Music and Art Powered by AI: Transformers can generate creative text such as original poetry, screenplays, and song lyrics, and they are also being explored for music and visual art. Imagine working with an AI to create a poem or a piece of music that expresses your own artistic vision.

Storytelling and Game Design: Transformers can be used to create dialogue, storylines, and narratives for interactive fiction or games. This makes it possible to create more customized and engaging game experiences.

Transformers for Science and Research:

Protein Structure Prediction: Beyond drug discovery, transformers can also model protein structures and other complex interactions. By analyzing protein sequences, they can help predict how proteins fold, which is a critical step in understanding protein function and designing new medications.

Material Science Discovery: Transformers can speed up the discovery of new materials by analyzing large scientific databases to find patterns. This might result in the creation of novel materials with advantageous qualities, altering industries like sustainable building and clean energy.

Transformers for Social Good:

Combating Misinformation: Misinformation spreading on the internet is a growing problem. Transformers can be used to analyze language and flag misleading or fabricated news. This has the potential to help people become more discerning consumers of online information.

Personalized Education: By creating learning materials that are specific to each student's needs, transformers may make learning experiences more personalized. Consider an AI instructor that can adapt its teaching approach to your areas of strength and weakness.

Practical Considerations for Using Transformers

While transformers offer immense capabilities, there are practical factors to consider when using them in real-world projects:

Computational Resources: Training large transformer models is costly and demands a lot of processing power, which is a particular obstacle in resource-limited settings. Model distillation, the process of training a smaller model to mimic a larger one, is one technique that can lower the computational requirements for deployment.

Data Requirements: Transformers often need large volumes of labeled training data to perform well on a given task. When labeled data is sparse, techniques like data augmentation, which creates additional data points artificially from existing ones, and transfer learning, which fine-tunes a pre-trained model on a smaller dataset, can help.

Explainability and Bias: Transformers are susceptible to biases in the training data, just like any other AI model. To guarantee the equitable and responsible use of these models, it is imperative to utilize strategies such as bias identification and reduction.
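To make the model distillation mentioned under Computational Resources more concrete, here is a minimal sketch of one common form of distillation loss: the student is trained to match the teacher's temperature-softened output distribution. The function names, the temperature value, and the toy logits are assumptions for illustration; practical setups usually combine this term with the ordinary training loss.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)        # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Mean KL divergence from softened teacher to softened student outputs."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = np.sum(p_teacher * (np.log(p_teacher) - np.log(p_student)), axis=-1)
    # The temperature**2 factor is a common convention for rescaling gradients.
    return float(np.mean(kl)) * temperature ** 2

teacher_logits = np.array([[2.0, 1.0, 0.1]])     # toy outputs of a large model
student_logits = np.array([[0.5, 0.5, 0.5]])     # toy outputs of a small model
loss = distillation_loss(student_logits, teacher_logits)
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge, so minimizing it pushes the smaller model toward the larger model's behavior.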

Societal Impact of Transformers

The rise of transformers has significant societal implications, with both benefits and potential drawbacks to consider:

Accessibility and Inclusion: Cross-cultural communication can be promoted and language barriers dismantled with transformer-based language translation systems. This may encourage understanding and cooperation on a global scale.

Productivity and Efficiency: Transformer-powered advances in NLP can automate processes like information extraction and document summarization, increasing productivity across a range of industries.

Fairness and Bias: Transformers have the potential to reinforce biases found in the training data, just like any other AI tool. To reduce these biases and provide equal access to AI-powered technologies, it is imperative to create models that are more inclusive and egalitarian.

The Human Factor: The Role of Humans in the Transformer Revolution

While transformers are powerful tools, they are not a replacement for human intelligence and creativity. Here's how humans will continue to play a crucial role in the transformer revolution:

Guiding Development and Deployment: Humans are responsible for setting the ethical and societal parameters for transformer development and deployment. Ensuring fairness, transparency, and responsible use of these technologies is paramount.

Providing High-Quality Training Data: The quality of training data significantly impacts the performance and potential biases of transformers. Humans play a vital role in curating and annotating data to ensure transformers learn from accurate and unbiased sources.

Maintaining Control and Oversight: As transformers become more sophisticated, it's crucial to maintain human oversight and control. Humans will need to develop robust frameworks to ensure transformers are used for good and not for malicious purposes.

Collaboration and Synergy: The most exciting advancements are likely to emerge from the synergy between human and machine intelligence. Humans can leverage transformers to augment their capabilities, while transformers can benefit from human guidance and expertise.

Benefits of Transformers: Revolutionizing Natural Language Processing (NLP)

Transformers have emerged as a powerful and versatile architecture, revolutionizing the field of Natural Language Processing (NLP). Here's a breakdown of the key benefits they offer:

Superior Performance: Compared to traditional Recurrent Neural Networks (RNNs), transformers excel at capturing long-range dependencies within sequences. This allows them to achieve state-of-the-art results on various NLP tasks, such as machine translation, text summarization, and question answering.

Parallelization: Unlike RNNs that process information sequentially, transformers can analyze all parts of an input sequence simultaneously. This enables them to be parallelized more efficiently, significantly speeding up training times, a crucial factor for large and complex models.

Ability to Handle Complexities of Language: Transformers are adept at understanding the nuances of language, including the relationships between words and the context in which they are used. This makes them well-suited for tasks that require a deeper understanding of language, such as sentiment analysis and sarcasm detection.

Adaptability: The transformer architecture is flexible and can be adapted for various NLP tasks. By fine-tuning pre-trained transformer models on specific datasets, researchers and developers can create powerful tools for diverse applications.

Efficiency Gains: Advancements in transformer architectures are leading to more efficient models that require less computational power to train and operate. This opens doors for deploying transformers in real-world scenarios with resource constraints.

Foundation for Future Advancements: Transformers serve as a cornerstone for ongoing research in NLP. Their ability to learn complex relationships within data paves the way for further breakthroughs in AI and language understanding.

The Future of Transformers: A Symbiotic Relationship with AI

As AI research continues to evolve, transformers are likely to play an even more prominent role. Here are some intriguing possibilities for the future:

The Future of Transformers: Expanding the Horizon

Lifelong Learning Transformers: Current transformers are trained on static datasets. Researchers are exploring ways to create transformers that can continuously learn and improve over time, adapting to new information and situations. This would make them even more versatile and adaptable for real-world applications.

Neurosymbolic Transformers: This is an area of research that seeks to combine the strengths of transformers (statistical learning) with symbolic AI approaches (knowledge representation and reasoning). This could lead to the development of more powerful and human-like AI systems that can not only learn from data but also reason and understand the world in a more comprehensive way.

Multimodal Transformers: The transformers discussed so far work primarily with text. Multimodal transformers aim to process and understand different modalities of data, like text, images, and audio, simultaneously. This could lead to the development of AI systems that can interact with the world in a more natural and human-like way.

Conclusion: A New Era of Human-Machine Collaboration

Transformers represent a significant leap forward in the field of AI. Their ability to process and generate language with unprecedented sophistication opens doors for groundbreaking advancements in various domains.

However, it's crucial to remember that transformers are tools, and like any tool, their value depends on how we use them.

By fostering responsible development, ethical considerations, and a focus on human-centered AI applications, we can harness the power of transformers to create a future where technology empowers us, expands our understanding of the world, and fuels our creative endeavors. The journey with transformers is far from over, and the possibilities are truly limitless.