Linux System Admin Interview Questions

Top 20 most curated interview questions for a Linux System Administrator

Context

At its core, Linux is a Unix-like operating system kernel, the fundamental component responsible for managing hardware resources and facilitating communication between software applications and the computer's hardware. What sets Linux apart is its open-source nature, which means that its source code is freely available for anyone to inspect, modify, and distribute. This open model has given rise to a global community of developers, enthusiasts, and organizations contributing to its growth, fostering a collaborative environment that encourages continuous improvement and innovation.

The Linux kernel serves as the foundation upon which various Linux distributions, or distros, are built. These distributions package the Linux kernel with additional software components, libraries, and utilities to create a complete and user-friendly operating system. Popular Linux distributions include Ubuntu, Debian, Fedora, CentOS, and Arch Linux, each catering to different user preferences and requirements.

The Linux community, a diverse and passionate collective, plays a pivotal role in shaping the trajectory of the operating system. It encompasses developers, system administrators, educators, and enthusiasts who engage in online forums, mailing lists, and collaborative projects. The Linux community embodies the spirit of shared learning and support, welcoming newcomers and fostering an environment where individuals can grow their skills and contribute to the greater good.

As the world of technology evolves, Linux continues to evolve with it. The rise of containerization and orchestration technologies, exemplified by Docker and Kubernetes, has propelled Linux to the forefront of modern application deployment. Containers, encapsulating applications and their dependencies, leverage Linux namespaces and cgroups to provide lightweight and isolated environments, fostering portability and scalability.

Explain the difference between root and sudo users.

In a Linux system, both the root user and sudo (superuser do) are related to administrative privileges, but they differ in how they handle access to system-level commands and operations.

Root User:

The root user, often referred to as the superuser, is the administrative account with the highest level of privileges on a Unix-like operating system, including Linux.

The root user has the authority to execute any command and access any file or directory on the system. This level of access comes with significant responsibilities and risks, as any action taken by the root user has a direct impact on the system's stability and security.

The root user is typically used for system maintenance, configuration, and troubleshooting tasks. However, regular use of the root account is discouraged to prevent accidental damage to the system.

Sudo User:

The sudo (superuser do) command allows non-root users to execute specific commands with elevated privileges. It provides a way to delegate administrative tasks to trusted users without sharing the root password.

Users granted sudo privileges can perform administrative tasks as if they were the root user but only for the commands explicitly allowed in the sudo configuration.

To use sudo, a user needs to prefix the desired command with sudo and provide their own password. The user's privileges are elevated only for the duration of that specific command.

Granting sudo access is controlled through the

/etc/sudoersfile, and administrators can fine-tune which users or groups can execute specific commands with elevated privileges.

Key Differences:

Privilege Scope:

The root user has unrestricted access to all system resources and commands.

Sudo users have limited, delegated access to specific commands as defined in the sudoers configuration.

Password Authentication:

The root user typically requires the root password for authentication.

Sudo users authenticate with their own passwords before executing privileged commands.

Logging:

Actions performed by the root user are often logged as root, without specifying the original user.

Sudo logs each command execution, associating it with the specific user who used sudo to run the command.

Risk Management:

The root user has the potential for inadvertent or intentional system-wide changes, posing a higher risk.

Sudo users have a more controlled and auditable approach to executing privileged commands, reducing the risk associated with administrative tasks.

Example:

sudo su # Switches user to root

sudo apt update # Executes commands as the same user

#but with elevated privillagesHow do you manage user accounts and groups in Linux?

Managing user accounts and groups in Linux is a fundamental aspect of system administration. These commands provide a basic overview of user and group management in Linux.

Keep in mind that proper management of users and groups is crucial for maintaining system security and ensuring that users have appropriate access levels to resources on the system. Always adhere to the principle of least privilege to enhance system security.

You can use built-in commands like useradd, usermod, groupadd, and groupmod to:

Create, modify, and delete user accounts and groups.

Set account properties like home directory, shell, and password.

Assign users to groups and manage group permissions.

Creating User Accounts

# To create a new user, use the useradd command

# Set a password for the new user with the passwd command

sudo passwd usernameModifying User Properties

# To modify user properties, use the usermod command.

# For example, to add a user to a specific group:

sudo usermod -aG groupname usernameDeleting User Accounts

sudo userdel usernameManaging User Groups

# To create a new group, use the groupadd command

sudo groupadd groupname

# To add a user to an existing group, use usermod

sudo usermod -aG groupname username

#To remove a user from a group

sudo deluser username groupnameViewing User Information

# To view detailed information about a user, you can use the id command id usernameManaging Home Directories

sudo usermod -d /path/to/new/home usernameEditing /etc/passwd and etc/group files

The user account information is stored in the etc/passwd file, and group information is in the etc/group file. You can manually edit these files, but it's generally recommended to use dedicated commands to make changes.

Changing Ownership and Permissions

# To change the ownership of files or directories, use the chown command

# To change permissions, use the chmod command

sudo chown username:groupname filenameSwitching Users

su - usernameTemporary Elevation of Privileges

sudo <command>Describe file system hierarchy in Linux.

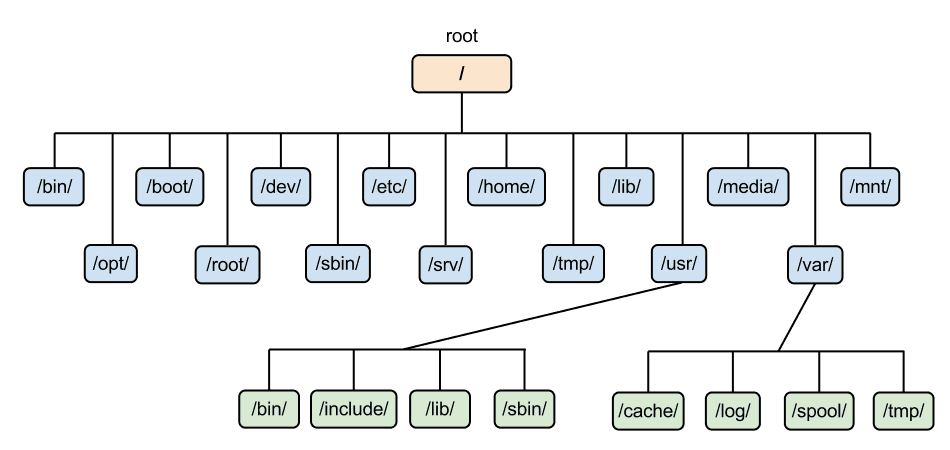

The / directory is the root, and everything else branches from it. Key directories include:

/bin and /sbin: Essential system binaries.

/boot: Bootloader files.

/dev: Device files for hardware access.

/etc: Configuration files for system services.

/home: Users' home directories.

/lib and /lib64: Shared libraries.

/media: Mount point for external media.

/opt: Third-party application directories.

/proc: Processes information.

/root: Root user's home directory.

/sys: System hardware information.

/tmp: Temporary files.

/usr: Application files and binaries.

/var: Variable data like logs and mail.

Explain the concept of permissions in Linux.

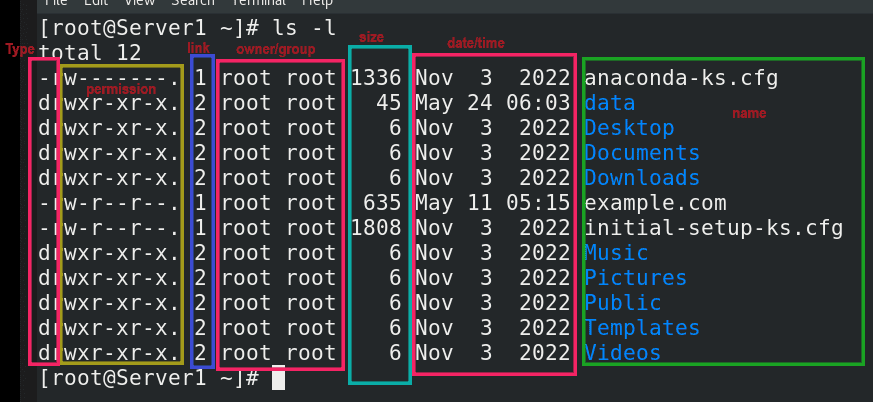

In Linux and other Unix-like operating systems, permissions are a fundamental aspect of the security model, governing how files and directories are accessed and manipulated. The permission system is designed to control who can do what with specific files and directories, enhancing the overall security and integrity of the system. Linux employs a set of permission attributes for each file and directory, dictating the actions that different users and groups can perform.

There are three primary types of permissions associated with files and directories:

Read (r):

Read permission allows a user to view the contents of a file or the names of files within a directory.

For directories, read permission enables listing the directory's contents.

Write (w):

Write permission grants the ability to modify the contents of a file or create, delete, and rename files within a directory.

For directories, write permission allows adding or removing files and subdirectories.

Execute (x):

Execute permission is necessary to run a file or enter a directory.

For directories, execute permission enables navigating into the directory.

These permissions are assigned to three different entities associated with each file or directory:

User (Owner):

The user who owns the file or directory.

The user can be the person who created the file or the user to whom the file's ownership has been explicitly assigned.

Group:

A group is a collection of users who share common permissions on files or directories.

The group ownership of a file or directory defines the permissions for all users in that group.

Others:

All other users who are not the owner and not members of the group.

These permissions apply to anyone else accessing the file or directory.

The combination of read, write, and execute permissions for each of these entities is represented by a three-character string. For example, the permission string "rw-r--r--" indicates that the owner has read and write permissions, the group has read-only permissions, and others have read-only permissions.

chmod u+r file.txt # Add read permission for the owner

chown user:group file.txt # Change owner to 'user' and group to 'group'

chgrp newgroup file.txt # Change the group ownership to 'newgroup'How do you manage processes in Linux?

Managing processes in Linux is a fundamental aspect of system administration. Processes are instances of executing programs, and monitoring and controlling them is crucial for system performance and stability. Here are some commonly used commands and techniques for managing processes in Linux:

Viewing Processes:

# The ps command provides a snapshot of currently running processes

ps aux

ps -efMonitoring Processes in Real-Time:

The top command displays real-time information about system processes, including resource usage and system statistics. It's interactive and can be particularly useful for monitoring system performance.

Killing Processes:

The kill command is used to terminate processes. You need to provide the process ID (PID) of the target process. For example:

kill PIDThe pkill command allows to send signals to processes based on their name. For example, to kill a process named "example":

pkill exampleBackground and Foreground Processes

bg and fg: Used to move processes to the background or foreground. For example

bg %1 # Move the job with job ID 1 to the background

fg %2 # Move the job with job ID 2 to the foregroundJob Control

jobs: Displays a list of current jobs and their statuses.

bg and fg: Move jobs to the background or foreground.

Ctrl + Z: Suspends a foreground job.

Nice and Renice

nice: Adjusts the priority of a process. Lower values increase priority.

nice -n 10 commandrenice: Changes the priority of an already running process.

renice 10 -p PIDProcess Information

pgrep: Finds the process ID of a program. For example

pgrep firefoxForeground and Background Execution

nohup: Allows a command to continue running after the user logs out.

nohup command &disown: Removes a process from the shell's job table, preventing it from receiving signals from the shell.

Systemd and Service Management

On modern Linux distributions using systemd, you can manage processes and services using commands like systemctl. For example:

systemctl start service-name

systemctl stop service-name

systemctl restart service-nameDescribe the different types of storage in Linux.

Understanding and selecting the appropriate storage type depends on the specific requirements of the system, including performance needs, data durability, scalability, and budget considerations. Linux's versatile storage support allows administrators to tailor storage solutions to match the demands of different applications and workloads.

Linux supports various types of storage technologies, offering flexibility and scalability for different use cases. Here are the primary types of storage in Linux:

Hard Disk Drives (HDD): Traditional spinning hard disk drives are one of the oldest and most common storage devices. They consist of rotating magnetic disks and read/write heads. Linux treats HDDs like any other block storage device.

Solid State Drives (SSD): SSDs use NAND-based flash memory to store data. They are faster, more durable, and consume less power than HDDs. Linux treats SSDs similarly to HDDs, and the filesystems and partitioning schemes remain consistent.

Network Attached Storage (NAS): NAS involves storing data on dedicated network-attached devices. These devices, often running specialized NAS operating systems, are accessible over a network. Linux supports various network protocols such as NFS (Network File System) and SMB (Server Message Block) to connect to NAS devices.

Storage Area Network (SAN): SAN is a dedicated high-speed network that connects and provides access to block-level storage devices. Linux supports SAN technologies like iSCSI (Internet Small Computer System Interface) to enable remote access to block storage devices.

USB Drives and External Storage: Linux can easily recognize and mount USB drives and external storage devices. These devices typically use the USB interface and can be used for data transfer, backups, and portable storage.

RAID (Redundant Array of Independent Disks): RAID involves combining multiple physical drives into a logical unit for redundancy, performance improvement, or both. Linux supports various RAID levels, such as RAID 0, RAID 1, RAID 5, and more, providing different trade-offs between performance and data protection.

Logical Volume Management (LVM): LVM allows for the creation of logical volumes that can span multiple physical volumes. It provides flexibility in resizing and managing storage resources dynamically. Linux administrators can use LVM to create, resize, and move logical volumes easily.

Filesystems: Filesystems are crucial for organizing and managing data on storage devices. Linux supports various filesystems, including ext4 (the default for many Linux distributions), XFS, Btrfs, and more. Each filesystem has its own features, performance characteristics, and use cases.

RAM Disks: Linux can create a RAM disk, a portion of RAM used as if it were a disk drive. This volatile storage is extremely fast but loses its data when the system is powered off. RAM disks are useful for temporary and highly performant storage needs.

Cloud Storage: Linux seamlessly integrates with cloud storage solutions, such as Amazon S3, Google Cloud Storage, or Azure Blob Storage. Cloud storage allows users to store and retrieve data from remote servers over the internet, providing scalable and distributed storage solutions.

Explain the concept of daemons and services in Linux.

Daemon

A daemon is a background process that runs independently of user interaction. Daemons typically start during the system boot process and continue to operate throughout the system's uptime. Daemons perform various tasks, such as handling system events, managing hardware components, or providing network services. Examples of common daemons include sshd for SSH, httpd for web servers, and cron for scheduled tasks. Daemons often detach from the terminal, run in the background, and are managed by init systems like System V init, Upstart, or systemd. They usually have a d appended to their names, like rsyslogd or kerneld.

Service

The term "service" is often used interchangeably with "daemon" in the context of Linux. A service is essentially a daemon that provides specific functionality or features on a system. Services can be system-level, managing core components, or user-level, catering to specific applications or tasks. System-level services include those responsible for networking (e.g., networking), logging (rsyslog), and time synchronization (ntpd). User-level services include applications like web servers (apache2), databases (mysql or postgresql), and other software that offers functionalities to users or other applications.

How do you automate tasks in Linux?

Automating tasks in Linux can significantly improve efficiency and reduce manual workload. Here are several methods and tools commonly used for automating tasks in Linux:

Shell Scripts

Shell scripting is a powerful way to automate tasks using the command-line interface.

Write scripts using bash, sh, or other shell languages to sequence commands, perform conditional checks, and iterate through tasks.

Save scripts with a .sh extension and make them executable using chmod +x script.sh.

Run scripts manually or schedule them using cron.

Cron Jobs

The cron scheduler allows you to automate recurring tasks at specific intervals.

Edit the crontab file using crontab -e to specify when and how often a script or command should run.

Use predefined schedules (e.g., daily, weekly) or specify custom cron expressions.

Systemd Timers

Systemd, the modern init system used by many Linux distributions, has its own timer functionality.

Create timer units and associate them with service units using systemd.

Timer units are defined in files with a .timer extension.

Ansible

Ansible is a powerful automation tool that uses a declarative language to define tasks.

Write playbooks in YAML to describe automation tasks.

Ansible connects to remote hosts over SSH and can perform various tasks, including package installation, file copying, and configuration management.

Automation Tools (Puppet, Chef, SaltStack)

Configuration management tools like Puppet, Chef, and SaltStack are designed to automate infrastructure provisioning and management.

Define infrastructure as code, allowing for consistent and repeatable setups.

These tools use a client-server model where a central server manages configurations on multiple nodes.

Scheduled Tasks with at

The at command allows you to schedule tasks to run once at a specific time.

Use at to schedule a one-time task or a script to run at a later time.

Example: echo "command" | at 2:30 PM

Watchdog (Systemd Service)

Systemd's watchdog feature can be used to monitor and restart services automatically if they become unresponsive.

Configure a service's watchdog settings in its systemd unit file.

Logrotate

Logrotate is a utility designed to manage log files by automatically rotating, compressing, and deleting old logs.

Configuration files for logrotate are typically found in /etc/logrotate.conf and /etc/logrotate.d/.

Task Schedulers (at, batch)

The at and batch commands allow you to schedule tasks to run at a later time.

at schedules tasks for a specific time, while batch runs tasks when the system load is low.

SSH and Key-Based Authentication

Automate tasks across multiple servers using SSH with key-based authentication.

Generate SSH key pairs, copy public keys to remote servers, and use SSH in scripts or automated processes.

Inotify Tools

Inotify is a Linux kernel feature that allows monitoring changes to files and directories in real-time.

Inotify tools like inotifywait and inotifywatch can be used to trigger actions in response to file system events.

Custom systemd Services

Create custom systemd service units to automate tasks that need to run continuously or on demand.

Define service units in files with a .service extension.

Custom udev Rules

udev is a device manager for the Linux kernel. Custom rules can be defined to trigger specific actions when devices are connected or disconnected.

Use udev rules to automate tasks based on hardware events.

What are some security best practices for managing Linux systems?

Keep software and packages updated: Regularly applying security patches and updates helps minimize vulnerabilities.

Use strong passwords and enforce password complexity: Implement password policies requiring long, complex passwords and regular changes.

Disable unnecessary services: Reduce attack surface by disabling unused services.

Monitor logs and audit system activity: Watch for suspicious activities and potential intrusions.

Restrict root access: Use sudo for specific commands instead of granting root access directly.

How do you troubleshoot network connectivity issues in Linux?

Verify IP configuration: Check if IP address, subnet mask, and gateway are correctly set.

Ping to test connectivity: Use ping to test reachability to other hosts.

Network interface status: Check status of network interfaces with ifconfig or ip addr.

Route table verification: Ensure routing table is correct with route -n.

Network diagnostics tools: Use tools like traceroute and tcpdump for further analysis.

Use traceroute for further analysis on packet drop, topology, etc.

Describe the role of SELinux in Linux security.

SELinux (Security-Enhanced Linux) is a Linux kernel security module that provides a mandatory access control (MAC) framework to enforce fine-grained access policies. Developed by the National Security Agency (NSA) and released as open-source software, SELinux enhances Linux security by adding an additional layer of access controls beyond the traditional discretionary access control (DAC) mechanisms.

Here are key aspects of the role of SELinux in Linux security:

Mandatory Access Control (MAC)

SELinux introduces the concept of mandatory access control, which is in contrast to the discretionary access control (DAC) provided by traditional Unix/Linux file permissions (owner, group, and others).

In DAC, file owners decide who can access their files. In SELinux, access is determined by policies configured by administrators and enforced by the kernel.

Security Policies

SELinux defines security policies that govern the behavior of processes and users on the system.

Policies specify the allowed or denied actions for different subjects (processes or users) on different objects (files, directories, devices, etc.).

Policies are written in a language known as Security Policy Language (SELinux Policy Language).

Labels and Contexts

SELinux assigns security labels and contexts to various system objects, such as files, processes, and network ports.

Labels are used to convey information about the security attributes of an object. For example, a file's label might include information about the type of content it holds and the processes that are allowed to access it.

Enforcing and Permissive Modes

SELinux operates in two modes: enforcing and permissive.

In enforcing mode, SELinux actively enforces security policies, denying actions that violate the policies.

In permissive mode, SELinux logs policy violations but does not block actions. This mode is often used during policy development and testing to identify potential issues without affecting system operation.

Context-Based Policies

SELinux policies are context-based, meaning that access decisions depend on the context in which a process or user operates.

For example, even if a user has read access to a file in traditional Unix permissions, SELinux may deny access based on the security context of the process attempting to access the file.

Preventing Unauthorized Access

SELinux helps prevent common security vulnerabilities by restricting processes and users from performing actions that could lead to system compromise.

By enforcing the principle of least privilege, SELinux minimizes the potential impact of security breaches.

Integration with Applications and Services

SELinux policies can be tailored to specific applications and services, allowing administrators to define precisely what actions each application is allowed to perform.

Many Linux distributions come with predefined SELinux policies for popular services, and administrators can customize these policies based on their specific deployment needs.

Audit Logging

SELinux provides detailed audit logging, allowing administrators to review and analyze security-related events.

Audit logs include information about policy violations, denied access attempts, and other security-relevant events.

Flexible Configuration

Administrators can configure and customize SELinux policies to accommodate the specific security requirements of their systems.

Tools like semanage and audit2allow assist in managing SELinux policies.

While SELinux enhances the security posture of Linux systems, its implementation requires a good understanding of security policies and contexts. Administrators need to be familiar with SELinux configuration and troubleshooting techniques to effectively deploy and manage SELinux on their systems. When properly configured, SELinux significantly contributes to the overall security and integrity of Linux-based environments.

What are some popular Linux distributions and their key differences?

Ubuntu

Target Audience: General users, developers, and beginners.

Package Management: Uses Debian package management (APT).

Desktop Environment: Default desktop environment is GNOME, but variants like Kubuntu (KDE), Xubuntu (Xfce), and Lubuntu (LXQt) are available.

Release Cycle: Regular releases every six months with long-term support (LTS) versions every two years.

Debian

Target Audience: General users, developers, and system administrators.

Package Management: Uses Debian package management (APT).

Stability: Known for stability and reliability.

Release Cycle: Stable releases occur when the Debian Release Team considers the distribution ready.

Fedora

Target Audience: Developers and enthusiasts.

Package Management: Uses RPM package management (DNF).

Desktop Environment: Default desktop environment is GNOME, but supports various others.

Release Cycle: Frequent releases with new features. Serves as a testing ground for technologies that may later appear in Red Hat Enterprise Linux.

CentOS

Target Audience: Enterprises and servers.

Package Management: Uses RPM package management (YUM).

Relation to RHEL: CentOS is a downstream, community-supported version of Red Hat Enterprise Linux (RHEL) without the official support but with the same packages and features.

Arch Linux

Target Audience: Advanced users and enthusiasts who prefer a minimalistic and customizable system.

Package Management: Pacman package management.

Installation: Follows a rolling release model and has a hands-on installation process where users build their system step by step.

openSUSE

Target Audience: General users, developers, and administrators.

Package Management: Uses RPM package management (zypper).

Desktop Environment: openSUSE offers both openSUSE Leap (based on SUSE Linux Enterprise) and openSUSE Tumbleweed (rolling release).

YaST: Features YaST, a comprehensive configuration tool.

Mint

Target Audience: Users who prefer a user-friendly and familiar environment (especially those transitioning from Windows).

Desktop Environment: Default desktop environment is Cinnamon, but variants include MATE and Xfce.

Package Management: Based on Ubuntu and uses APT.

Gentoo

Target Audience: Enthusiasts who want a highly customizable and optimized system.

Package Management: Portage package management, where software is compiled locally from source code.

Rolling Release: Follows a rolling release model, where software is continuously updated.

Slackware

Target Audience: Users who appreciate simplicity and stability.

Package Management: Uses its own packaging tool (pkgtool) and package format (.tgz).

Minimalistic Approach: Known for its simplicity and lack of automation tools.

Kali Linux

Target Audience: Penetration testers, security professionals, and forensic analysts.

Purpose: Specifically designed for cybersecurity tasks and includes a wide range of security tools out of the box.

Based on Debian: Derived from Debian, and follows a rolling release model.

How do you handle disk space management in Linux?

Monitor disk usage: Use df -h to see disk usage per partition.

Identify large files: Use du -sh * to find space-consuming files.

Clean up temporary files: Use rm -rf /tmp/* to remove temporary files.

Log rotation: Manage and rotate logs to prevent disk filling.

LVM for flexibility: Use Logical Volume Management for dynamic resizing and pooling of disks.

#Use the df command to display disk space usage on mounted filesystems: df -h

#Use the du command to estimate the space used by a directory and its subdirectories:

du -h /path/to/directory

#Use the find command to identify large files on the system:

find / -type f -size +100M -exec ls -lh {} \;

#Remove temporary files, log files, or other unnecessary files using the rm command:

rm /path/to/file

#Empty or truncate log files without disrupting running services:

echo > /var/log/syslog

#Use log rotation tools like logrotate to manage log files and prevent them from consuming excessive disk space.

logrotate -f /etc/logrotate.conf

#Some package managers have their own cache directories. Clear them to free up space:

rm -rf /var/cache/pacman/pkg/* # For Pacman (Arch Linux)Explain the differences between physical and virtual memory in Linux.

Physical memory (RAM) and virtual memory are two distinct concepts in Linux, each serving different purposes in the overall memory management of a system. Here are the key differences between physical and virtual memory:

Physical Memory (RAM)

Definition: Physical memory, often referred to as RAM (Random Access Memory), is the actual hardware component where data is temporarily stored for quick access by the CPU.

Role: RAM is used to store data and instructions that the CPU actively uses during the execution of processes and applications.

Speed: Accessing data from RAM is faster compared to accessing data from other storage devices like hard drives.

Size: The size of physical memory is limited by the hardware and is typically measured in gigabytes (GB) or terabytes (TB).

Virtual Memory

Definition: Virtual memory is a memory management technique that uses a combination of physical memory (RAM) and disk space to provide the illusion of a larger memory space than physically available.

Role: Virtual memory allows the operating system to use disk space as an extension of physical memory, allowing more applications to run simultaneously without requiring all data to fit into RAM.

Page File (Swap Space): The portion of the disk space used for virtual memory is often referred to as the "page file" or "swap space."

Paging and Swapping: When the physical RAM becomes insufficient, the operating system swaps less frequently used data from RAM to the disk and brings in the required data when needed. This process is known as paging and swapping.

Slower Access: Accessing data from virtual memory on the disk is slower compared to accessing data from physical RAM.

How do you configure and manage network interfaces in Linux?

View network interfaces: Use ip addr or ifconfig to list available interfaces and their statuses.

Configure IP address: Use ifconfig or ip addr with specific options to set IP address, netmask, and gateway.

Manage interfaces: Use tools like nmcli or network manager GUI to enable, disable, and configure additional settings.

Explain the purpose of firewalls in Linux.

A firewall is a crucial component of a network security strategy that helps control and monitor incoming and outgoing network traffic. Its primary purpose is to establish a barrier between a trusted internal network and untrusted external networks, preventing unauthorized access and protecting against potential security threats. In Linux, the most common firewall solutions are iptables and its successor nftables, as well as user-friendly frontends like UFW (Uncomplicated Firewall). Purpose of Firewalls in Linux:

Access Control

Firewalls control access to network resources by defining rules that specify which types of traffic are allowed or denied.

They filter packets based on various attributes such as source/destination IP addresses, ports, and protocols.

Security Policy Enforcement

Firewalls enforce a security policy that dictates how network traffic should be treated.

Policies can be configured to allow or deny traffic based on specific criteria, helping to mitigate potential security threats.

Packet Filtering

Firewalls inspect each packet that traverses the network and make decisions on whether to permit or block it based on predefined rules.

Packet filtering is an essential function for protecting against unauthorized access and potential attacks.

Network Address Translation (NAT)

Firewalls often perform NAT, translating private IP addresses used within an internal network to a single public IP address for communication with external networks.

NAT helps conceal the internal network structure and conserves public IP addresses.

Logging and Auditing

Firewalls maintain logs of network activity, allowing administrators to review and analyze traffic patterns, identify potential security incidents, and troubleshoot issues.

Logging is crucial for monitoring and maintaining the security of the network.

Describe the different types of Linux backup solutions.

Linux offers a variety of backup solutions, each with its own features and use cases. Choosing the right backup solution depends on factors such as the size of data, recovery time objectives, storage capacity, and overall backup strategy. Here are different types of Linux backup solutions:

File-Based Backups:

tar and cp: The tar command and cp command can be used to create file-based backups by creating archives of directories and files.

tar -cvzf backup.tar.gz /path/to/source

cp -r /path/to/source /path/to/backuprsync:

rsync is a powerful tool for file synchronization and backup. It efficiently transfers and synchronizes files between local and remote systems.

rsync -av /path/to/source/ /path/to/backup/Full Backup:

A full backup involves copying all selected files and directories, regardless of whether they have changed since the last backup.

tar -cvzf full_backup.tar.gz --directory=/path/to/source .Incremental Backup:

Incremental backups only copy files that have changed since the last backup. Each subsequent backup only includes changes made since the last backup, reducing storage requirements.

tar -cvzf incremental_backup.tar.gz --directory=/path/to/source --listed-incremental=incremental.snar .Differential Backup:

Differential backups copy all changes made since the last full backup. While similar to incremental backups, differential backups do not rely on previous backup sets.

rsync -av --link-dest=/path/to/previous_full_backup /path/to/source/ /path/to/differential_backup/Snapshot-Based Backups:

Snapshot-based backups capture a point-in-time snapshot of a filesystem. Tools like LVM (Logical Volume Manager) or Btrfs can be used to create and manage snapshots.

lvcreate --snapshot --name=snapshot_name --size=5G /dev/vg_name/lv_nameCloud Backups:

Cloud backup solutions involve storing data in remote cloud storage services. Tools like rclone or native cloud provider tools can be used.

rclone copy /path/to/source remote:backup_destinationDatabase Backup:

mysqldump and pg_dump: For MySQL and PostgreSQL databases, tools like mysqldump and pg_dump can be used to export database contents.

mysqldump -u username -p dbname > backup.sql

pg_dump -U username -d dbname > backup.sqlHow do you monitor system performance and resource utilization in Linux?

System resources: Use top or htop to monitor CPU, memory, disk, and network usage in real-time.

Process details: Use ps or pstree to view running processes and their resource consumption.

Log analysis: Analyze system logs like /var/log/messages for performance indicators and potential issues.

What are some essential troubleshooting methods for Linux system issues?

Identify symptoms: Carefully analyze error messages, user reports, and system behavior.

Gather logs: Check relevant system logs like /var/log/messages for clues.

Consult documentation: Refer to official documentation or online resources for specific troubleshooting steps.

Use diagnostic tools: Utilize tools like ping, traceroute, netstat, etc., for network problems.

Seek community support: Engage in online forums and communities for further assistance if needed.

Describe your experience with automation tools in Linux administration.

Share your expertise and experience with tools like:

Shell scripting: Automate repetitive tasks with bash scripts.

Ansible/Puppet/Chef: Configuration management tools for large-scale infrastructure.

Cron jobs: Schedule automated tasks to run at specific times.

Monitoring tools: Automate system monitoring and alerting for proactive troubleshooting.

Remember: The specific answer will depend on your personal experience and skillset. Highlight your knowledge and proficiency with relevant tools and examples.

What are your preferred methods for monitoring and optimizing security in Linux systems?

Security tools and utilities: Utilizing tools like iptables, fail2ban, tripwire, and log analysis frameworks for intrusion detection and prevention.

Vulnerability management: Regularly scanning systems for vulnerabilities, applying security patches promptly, and maintaining up-to-date software versions.

System hardening: Implementing security best practices like strong passwords, restricted access, and secure configurations to minimize attack surface.

How do you stay updated on the latest developments and trends in Linux technologies?

Training and certifications: Pursuing relevant Linux certifications or enrolling in online courses to enhance your skills and knowledge.

Online communities and forums: Participating in discussions, reading blogs, and attending online events to keep up with new technologies and best practices.

Industry publications and news sources: Subscribing to newsletters, podcasts, and technology magazines to stay informed about evolving trends.