🧩 API Gateway: The Traffic Controller of Modern Architectures

By DevTonics – Developer Cheatsheets & System Design Simplified

In today’s world of microservices, an API Gateway is the silent hero sitting between your client and a jungle of backend services. It’s often a hot topic in system design interviews and a real-world must-know for developers and DevOps engineers.

Let’s break it down in DevTonics style 👇

🚪 What is an API Gateway?

An API Gateway acts as a reverse proxy that receives client requests, processes them, and routes them to the appropriate backend services.

Think of it as a traffic controller for all your API calls.

It handles cross-cutting concerns like authentication, rate limiting, logging, load balancing, and more — all in one place.
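Here is a minimal Python sketch of what "all in one place" can mean. It chains cross-cutting concerns as middleware around a backend call, and it is not any particular product's API. The dict-shaped requests and responses, the `x-api-key` header, and the helper names are hypothetical and chosen only for illustration.

```python
from typing import Callable, Dict, List, Set

# Hypothetical request/response shapes: plain dicts, for illustration only.
Request = Dict[str, object]
Response = Dict[str, object]
Handler = Callable[[Request], Response]
Middleware = Callable[[Handler], Handler]


def require_api_key(valid_keys: Set[str]) -> Middleware:
    """Reject requests whose x-api-key header is not a known key."""
    def wrap(next_handler: Handler) -> Handler:
        def handle(req: Request) -> Response:
            headers = req.get("headers", {})
            if headers.get("x-api-key") not in valid_keys:
                return {"status": 401, "body": "unauthorized"}  # backend never sees it
            return next_handler(req)
        return handle
    return wrap


def log_requests(sink: List[str]) -> Middleware:
    """Record 'METHOD PATH -> STATUS' for every request that passes through."""
    def wrap(next_handler: Handler) -> Handler:
        def handle(req: Request) -> Response:
            resp = next_handler(req)
            sink.append(f"{req['method']} {req['path']} -> {resp['status']}")
            return resp
        return handle
    return wrap


def build_pipeline(backend: Handler, *middlewares: Middleware) -> Handler:
    """Wrap the backend so the first middleware listed is the outermost layer."""
    handler = backend
    for mw in reversed(middlewares):
        handler = mw(handler)
    return handler


access_log: List[str] = []
gateway = build_pipeline(
    lambda req: {"status": 200, "body": "ok"},  # stand-in for a real backend service
    log_requests(access_log),
    require_api_key({"secret-123"}),
)
gateway({"method": "GET", "path": "/orders", "headers": {"x-api-key": "secret-123"}})
gateway({"method": "GET", "path": "/orders", "headers": {}})
print(access_log)  # ['GET /orders -> 200', 'GET /orders -> 401']
```

Logging is the outermost layer here, so it also records requests that the auth layer rejected. Individual services never have to implement either concern.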

🛠 Why Do We Use an API Gateway?

Here’s what it gives you out of the box:

✅ Central entry point to your backend

✅ Handles routing and request transformation

✅ Enforces security policies (authentication via JWT, OAuth, API keys)

✅ Performs rate limiting & throttling

✅ Supports caching and response transformation

✅ Logs requests and metrics

✅ Prevents direct access to internal services

Without it, each client has to call each microservice directly. That couples clients to your internal service layout and forces every service to reimplement auth, rate limiting, and logging.

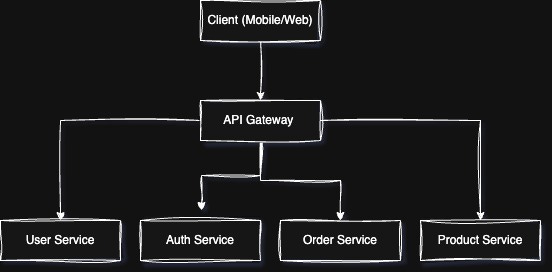

🗺 Architecture: Where It Sits

A typical flow looks like:

Client → API Gateway → Backend microservices

It abstracts internal APIs, so clients don’t need to know how the backend is structured.
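Here is a minimal sketch of that abstraction, built on a hypothetical routing table. The gateway maps public path prefixes to internal service addresses using longest-prefix matching, so clients only ever see `/api/...` paths. The hostnames and ports are made up. Stripping the matched prefix before forwarding is one design choice; some gateways forward the full path instead.

```python
from typing import Dict, Optional

# Hypothetical routing table: public path prefix -> internal service base URL.
ROUTES: Dict[str, str] = {
    "/api/users": "http://user-service:8080",
    "/api/orders": "http://order-service:8080",
    "/api/orders/export": "http://reporting-service:9000",
}


def resolve(path: str, routes: Dict[str, str] = ROUTES) -> Optional[str]:
    """Return the internal URL for a public path via longest-prefix match, or None."""
    best: Optional[str] = None
    for prefix in routes:
        # Match whole path segments only, so "/api/usersX" does not hit "/api/users".
        if path == prefix or path.startswith(prefix + "/"):
            if best is None or len(prefix) > len(best):
                best = prefix
    if best is None:
        return None
    # Strip the public prefix and append the remainder to the internal base URL.
    return routes[best] + path[len(best):]


print(resolve("/api/orders/42"))          # http://order-service:8080/42
print(resolve("/api/orders/export/csv"))  # http://reporting-service:9000/csv
print(resolve("/api/usersX"))             # None
```

If the orders team later splits out a reporting service, only this table changes. Clients keep calling the same URLs.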

⚙️ Popular API Gateway Tools

Here are some tools commonly used in real-world systems:

AWS API Gateway – Scales well, native to the AWS ecosystem

Kong – Open-source and widely adopted

NGINX – Lightweight and flexible

Apigee (by Google) – Enterprise-grade features

Traefik – Great for Kubernetes

Zuul (by Netflix) – Java-based and battle-tested

📚 API Gateway vs Load Balancer – What's the Difference?

Here’s how they compare:

🔁 Load Balancer:

Works at Layer 4 (TCP) or Layer 7 (HTTP)

Distributes traffic across servers

Routes traffic without applying application logic (even at Layer 7, it usually matches only simple rules such as host or path)

No built-in support for authentication, rate limiting, etc.

🧠 API Gateway:

Works at the Application Layer (Layer 7)

Understands HTTP methods, routes, and request data

Can handle authentication, authorization, rate limiting, and more

Ideal for microservices and modern apps

TL;DR: Load Balancer = "Where to send traffic?"

API Gateway = "What is this request and how should I handle it?"
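The contrast fits in a few lines of Python. The round-robin balancer below picks a server without looking at the request at all. The hypothetical `gateway_decide` policy, by contrast, inspects the method, path, and headers. Both are simplified illustrations, not how any specific product is implemented.

```python
import itertools
from typing import Dict, List


class RoundRobinBalancer:
    """Hands out servers in rotation; it never looks at what the request contains."""

    def __init__(self, servers: List[str]) -> None:
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


def gateway_decide(method: str, path: str, headers: Dict[str, str]) -> str:
    """Hypothetical gateway policy: the outcome depends on the request's content."""
    if "authorization" not in headers:
        return "reject: 401"  # no credentials, stop at the edge
    if path.startswith("/orders"):
        # Only reads and creates are exposed publicly in this made-up policy.
        return "forward: order-service" if method in ("GET", "POST") else "reject: 405"
    return "reject: 404"  # no route configured for this path


lb = RoundRobinBalancer(["10.0.0.1", "10.0.0.2"])
print([lb.pick() for _ in range(3)])  # ['10.0.0.1', '10.0.0.2', '10.0.0.1']
print(gateway_decide("GET", "/orders/7", {}))  # reject: 401
print(gateway_decide("POST", "/orders", {"authorization": "Bearer abc"}))  # forward: order-service
```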

💬 API Gateway in System Design Interviews

API Gateways are common in FAANG-level and SaaS-scale interview problems. Here's how you might be asked:

🔍 Top Interview Questions (With Short Answers)

1. Why use an API Gateway in a microservices architecture?

To centralize access, apply consistent policies, and abstract service complexities from clients.

2. How does an API Gateway help with rate limiting?

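It tracks incoming requests per client key (IP address, API key, or token), usually with an algorithm such as a token bucket or sliding window. Requests over the limit are rejected, typically with HTTP 429 Too Many Requests, to prevent abuse.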
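One common algorithm for per-client limits is the token bucket. The sketch below assumes an in-memory dict of buckets keyed by IP or token. A gateway running as several instances would usually keep this state in a shared store instead. The class and parameter names are illustrative, and the injectable clock is only there so the behavior is easy to demonstrate.

```python
import time
from typing import Callable, Dict, Tuple


class TokenBucketLimiter:
    """Per-client token bucket: refills `rate` tokens/second, bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        # client key (IP or token) -> (tokens remaining, time of last update)
        self.buckets: Dict[str, Tuple[float, float]] = {}

    def allow(self, client_key: str) -> bool:
        now = self.clock()
        tokens, last = self.buckets.get(client_key, (self.capacity, now))
        # Refill in proportion to elapsed time, never beyond capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.rate)
        if tokens >= 1:
            self.buckets[client_key] = (tokens - 1, now)
            return True
        self.buckets[client_key] = (tokens, now)
        return False  # a gateway would typically answer HTTP 429 here


now = [0.0]
limiter = TokenBucketLimiter(rate=1.0, capacity=2, clock=lambda: now[0])
print([limiter.allow("203.0.113.7") for _ in range(3)])  # [True, True, False]
now[0] = 1.0  # one second later, one token has been refilled
print(limiter.allow("203.0.113.7"))  # True
```

The capacity allows short bursts, while the refill rate caps the sustained request rate per client.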

3. What happens if the API Gateway fails?

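Without redundancy, it becomes a single point of failure. Mitigate this by running multiple gateway instances (horizontal scaling), ideally across availability zones, with health checks and automatic failover between replicas. Caching at the edge can also keep some read traffic flowing during an outage.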
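The failover idea can be sketched as follows. It assumes a hypothetical `send` function that forwards a request to one gateway replica and raises `ConnectionError` when that replica is unreachable. In practice this logic usually lives in the load balancer or DNS layer in front of the gateway replicas and is driven by health checks. The replica names here are made up.

```python
from typing import Callable, Dict, List


class AllReplicasDown(Exception):
    """Raised when no gateway replica could handle the request."""


def forward_with_failover(replicas: List[str],
                          send: Callable[[str], str],
                          healthy: Dict[str, bool]) -> str:
    """Try replicas in order, skipping ones marked down; mark failures and move on."""
    for replica in replicas:
        if not healthy.get(replica, True):
            continue  # a previous failure or health check marked it as down
        try:
            return send(replica)
        except ConnectionError:
            healthy[replica] = False  # fail over to the next replica
    raise AllReplicasDown("no healthy gateway replica available")


def fake_send(replica: str) -> str:
    # Hypothetical transport: replica "gateway-a" is down in this demo.
    if replica == "gateway-a":
        raise ConnectionError("connection refused")
    return f"handled by {replica}"


health: Dict[str, bool] = {}
print(forward_with_failover(["gateway-a", "gateway-b"], fake_send, health))  # handled by gateway-b
print(health)  # {'gateway-a': False}
```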

4. How does the API Gateway handle authentication?

It validates credentials such as JWTs or OAuth2 access tokens (signature, expiry, and claims like issuer) before forwarding the request to downstream services.
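For a JWT signed with a shared secret (HS256), the check can be sketched with only the Python standard library. This illustrates the steps (split the token, verify the HMAC-SHA256 signature, check `exp`) and is not a hardened implementation. A real gateway would typically rely on a vetted JWT library and validate claims such as issuer and audience. For OAuth2 providers, it usually verifies RS256 signatures against the provider's published keys. The secret and claims below are made up.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_decode(segment: str) -> bytes:
    # JWT segments are unpadded base64url; restore the padding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


def verify_hs256(token: str, secret: bytes, now: Optional[float] = None) -> Optional[dict]:
    """Return the claims if the HS256 signature is valid and the token is unexpired, else None."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        if header.get("alg") != "HS256":
            return None  # refuse any other algorithm, including "none"
        signing_input = f"{header_b64}.{payload_b64}".encode()
        expected = hmac.new(secret, signing_input, hashlib.sha256).digest()
        if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
            return None  # altered token or signed with a different key
        claims = json.loads(_b64url_decode(payload_b64))
        exp = claims.get("exp")
        current = time.time() if now is None else now
        if exp is not None and current >= exp:
            return None  # token has expired
        return claims
    except (ValueError, AttributeError, TypeError):
        return None  # malformed token (bad structure, base64, or JSON)


# Demo: mint a token with a hypothetical shared secret, then verify it as a gateway would.
secret = b"demo-secret"
h = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
p = _b64url_encode(json.dumps({"sub": "user-42", "exp": 2_000_000_000}).encode())
s = _b64url_encode(hmac.new(secret, f"{h}.{p}".encode(), hashlib.sha256).digest())
print(verify_hs256(f"{h}.{p}.{s}", secret, now=1_700_000_000))  # {'sub': 'user-42', 'exp': 2000000000}
print(verify_hs256(f"{h}.{p}.{s}", b"wrong-secret"))  # None
```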

5. How is API Gateway different from a service mesh?

API Gateway = north-south traffic (client to service)

Service Mesh = east-west traffic (service to service)

6. Can API Gateways cache responses?

Yes, especially for GET requests. This reduces load and improves speed.
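Here is a minimal sketch of GET-only response caching with a time-to-live. It assumes a hypothetical `backend(method, path)` callable. Keying on the path alone is a simplification: a real gateway cache would also consider query strings, relevant headers, and the service's `Cache-Control` directives.

```python
import time
from typing import Callable, Dict, List, Tuple


class GetResponseCache:
    """Caches backend responses to GET requests for `ttl` seconds, keyed by path."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self.clock = clock
        self._store: Dict[str, Tuple[float, str]] = {}  # path -> (expires_at, body)

    def handle(self, method: str, path: str, backend: Callable[[str, str], str]) -> str:
        if method != "GET":
            return backend(method, path)  # non-GET requests always reach the service
        now = self.clock()
        entry = self._store.get(path)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: the backend is not called
        body = backend(method, path)
        self._store[path] = (now + self.ttl, body)
        return body


calls: List[str] = []

def fake_backend(method: str, path: str) -> str:
    calls.append(path)
    return f"{method} {path} (backend call #{len(calls)})"

clock = [0.0]
cache = GetResponseCache(ttl=30, clock=lambda: clock[0])
print(cache.handle("GET", "/products", fake_backend))  # GET /products (backend call #1)
print(cache.handle("GET", "/products", fake_backend))  # GET /products (backend call #1)
clock[0] = 31  # past the TTL, so the next GET goes to the backend again
print(cache.handle("GET", "/products", fake_backend))  # GET /products (backend call #2)
```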

7. Where should API Gateway sit in a scalable architecture?

Just behind the CDN (like Cloudflare), before the load balancer or services.

8. What are some risks or cons?

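Added latency (an extra network hop plus policy processing) if not optimized

Operational complexity (one more component to configure, deploy, and monitor)

Potential single point of failure (SPOF) without redundancy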

Costs (especially with managed gateways)

🧠 Final Thoughts: Why You Should Know This

Understanding API Gateways is key for:

Building scalable microservice-based systems

Performing well in system design interviews

Designing secure and efficient APIs

Integrating DevOps and observability best practices

Follow DevTonics.in or @DevTonics on Instagram for weekly drops on:

DevOps

System Design

Career & Tech Interview Prep